Nowadays, many enterprises are building data centers that centralize all of their services. One of these services is the call center (contact center), which is based on Voice over IP (VoIP) technology. To protect voice traffic, QoS has to be applied across the whole enterprise network; this article discusses how.

Most VoIP service providers deploy Quality of Service (QoS) today so that enterprises can use their bandwidth efficiently in an integrated network. These providers expect their clients to implement QoS on their LANs in order to make the most of the available bandwidth and to ensure high-quality VoIP. With the necessary equipment in the LAN, a network administrator who knows QoS design and implementation can configure the routers and switches to give priority to voice.

This article focuses on the implementation of Quality of Service (QoS) in a LAN that extends over an IP/MPLS (WAN) network. QoS was configured on all the switches in a particular network so that voice packets crossing it are given priority. The aim is to show that although QoS is less critical in a LAN within a single region, it becomes important when that LAN extends to other regions over an IP/MPLS network.

Quality of Service (QoS) is the ability of networking equipment to differentiate among classes of traffic and, based on the significance of the traffic, to give each class a different priority when the network is congested. QoS is not a single feature that is configured on a router or switch; rather, it is a term that refers to a wide variety of mechanisms used to influence traffic patterns on a network. It lets network administrators give some traffic priority over other traffic.

Models of QoS:

The purpose of QoS is to guarantee a minimum bandwidth for identified traffic, to control jitter and latency, and to reduce packet loss. This can be done by several means: congestion management, congestion avoidance, or policing and traffic shaping. The means chosen depends largely on the goal of the network administrator.

QoS can be divided into three different models:

- The Best Effort model (the default)

- Integrated Services (IntServ)

- Differentiated Services (DiffServ)

The Best Effort model is the default on all networking devices. It does not require any QoS configuration.

DiffServ meets the need for simple, coarse methods of putting traffic into classes, called Classes of Service (CoS). It does not require a specific protocol for providing QoS; instead, it specifies an architectural framework. DiffServ carries out its major function through a small, well-defined set of building blocks from which different aggregate behaviours can be built. The Type of Service (ToS) byte in the IP header is marked so that packets can be divided into different classes, which form the aggregate behaviours [5]. The Differentiated Services Code Point (DSCP) is a 6-bit pattern in the IPv4 ToS octet or the IPv6 Traffic Class octet [5]. DSCP supports up to 64 aggregates or classes, and all classification and QoS in the DiffServ model revolves around the DSCP.
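
As a rough illustration of where the DSCP sits in the ToS octet, the sketch below converts between the two. The function names are hypothetical; the only assumptions beyond the text are that the DSCP occupies the upper six bits of the octet (the lower two bits are used for ECN) and that Expedited Forwarding uses code point 46.

```python
# Minimal sketch: DSCP is the upper 6 bits of the IPv4 ToS octet.
# Function names are illustrative, not taken from any library.
EF_DSCP = 46  # Expedited Forwarding code point


def dscp_to_tos(dscp: int) -> int:
    """Return the ToS octet value that carries the given DSCP (ECN bits zero)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits (0-63)")
    return dscp << 2


def tos_to_dscp(tos: int) -> int:
    """Extract the DSCP from a ToS octet, ignoring the two low-order bits."""
    if not 0 <= tos <= 255:
        raise ValueError("ToS must fit in one octet (0-255)")
    return tos >> 2


print(hex(dscp_to_tos(EF_DSCP)))  # 0xb8
print(tos_to_dscp(0xB8))          # 46
```

Six bits give 2^6 = 64 possible values, which is where the limit of 64 classes comes from.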

QoS for Voice:

Voice packets tolerate very little delay. They are delay-sensitive, and even a small delay in transmitting them can cause noticeable discomfort for the user.

In the case of VoIP, the major factors that will affect its quality are packet loss and packet delay.

Lost packets: When data is sent over an IP network, some packets can be lost. With TCP, a lost packet can be resent, but VoIP uses UDP, so a lost packet is not retransmitted.

Packet delay (latency): Packet delay is the time it takes a packet to reach the receiving endpoint after it has been transmitted from the source. This is called end-to-end delay. It consists of two components: fixed network delay and variable delay. Packet delay can degrade voice quality through end-to-end voice latency, or through packet loss if the delay is variable. The end-to-end voice latency must not exceed 250 ms; beyond that, the conversation sounds like two parties talking on a CB radio. To control latency, we have to know its main causes: codecs, queuing, waiting for packets being transmitted, serialization, the jitter buffer and others.

Because voice will be carried over the network, the network between data centres and contact centres must meet the following minimum requirements:

+ Packet loss less than 1%

+ Network latency less than 50 ms

+ Jitter less than 20 ms
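
The three requirements above can be checked against measured values with a few lines of code. This is only a sketch: the function name and the idea of feeding it measurements from a monitoring probe are assumptions, while the thresholds are the ones listed above.

```python
# Thresholds from the list above; "less than" means the limit itself fails.
MAX_LOSS_PCT = 1.0
MAX_LATENCY_MS = 50.0
MAX_JITTER_MS = 20.0


def voice_path_violations(loss_pct, latency_ms, jitter_ms):
    """Return the names of the requirements that a measured path violates."""
    violations = []
    if loss_pct >= MAX_LOSS_PCT:
        violations.append("packet loss")
    if latency_ms >= MAX_LATENCY_MS:
        violations.append("latency")
    if jitter_ms >= MAX_JITTER_MS:
        violations.append("jitter")
    return violations


# Hypothetical measurements for two paths.
print(voice_path_violations(0.2, 35, 8))   # []
print(voice_path_violations(1.5, 35, 25))  # ['packet loss', 'jitter']
```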

QoS can be used to prevent a voice packet from waiting behind other packets before it is transmitted. QoS sends voice out of the queue ahead of the other packets in it and, by giving more bandwidth to voice, helps to mitigate serialization delay.
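
To see why waiting behind other packets matters, consider serialization delay: the time needed to put a packet onto the link, which is the packet size in bits divided by the link rate. The sketch below uses assumed numbers (a 512 kbit/s link, a 1500-byte data packet and a 200-byte voice packet) purely for illustration.

```python
def serialization_delay_ms(size_bytes: int, link_bps: int) -> float:
    """Time in milliseconds to clock a packet of the given size onto the link."""
    return size_bytes * 8 / link_bps * 1000


LINK_BPS = 512_000  # assumed WAN link rate

data_ms = serialization_delay_ms(1500, LINK_BPS)   # large data packet
voice_ms = serialization_delay_ms(200, LINK_BPS)   # small voice packet

print(f"data packet:  {data_ms:.2f} ms")   # 23.44 ms
print(f"voice packet: {voice_ms:.2f} ms")  # 3.12 ms
```

On such a link, a voice packet stuck behind a single full-size data packet waits more than 23 ms before its own transmission even starts, which already exceeds the 20 ms jitter requirement.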

In essence, QoS for voice packets reduces latency, uses bandwidth efficiently and delivers voice packets quickly. The preferred method of configuring QoS is to give voice priority using Low Latency Queuing (LLQ) or Priority Queuing-Weighted Fair Queuing (PQ-WFQ).

QoS Implementation

QoS implementation can be a simple or a complex task, depending on factors such as the QoS features offered by the networking devices, the traffic types and patterns in the network, and the level of control that needs to be exercised over incoming and outgoing traffic. The network engineer cannot exercise more control over network traffic than the QoS features of the networking devices allow.

The DiffServ model allows network traffic to be broken down into small flows for appropriate marking. Each such flow is called a class. The network therefore recognises traffic by class, rather than receiving a specific QoS request from each application. Because the flows are marked, the devices along the path can recognise them and give each marked flow the appropriate treatment.

When a packet has been properly marked and identified by the router or switch, it is given special treatment called Per-Hop Behaviour (PHB) by these devices. The PHB defines how packets are treated at each hop. There are three standardized PHBs currently in use:

a. Default PHB: This uses best-effort forwarding to forward packets.

b. Expedited Forwarding (EF): It guarantees that each DiffServ node gives low delay, low jitter and low loss to any packet marked with EF. It is used mainly for Real-time Transport Protocol (RTP) applications, such as voice and video.

c. Assured Forwarding (AF): It provides a lower level of service than EF. It is used mostly for mission-critical applications, or for any application that is not too sensitive to delay but needs assurance that its packets will be delivered by the network.
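
Each PHB is selected by a DSCP value. The sketch below lists the standard code points for the Default and EF behaviours and computes AF code points from the class (1 to 4) and drop precedence (1 to 3) using the usual AFxy numbering, DSCP = 8x + 2y. The function and dictionary names are illustrative only.

```python
def af_dscp(af_class: int, drop_precedence: int) -> int:
    """Return the DSCP for AF<class><drop>, e.g. af_dscp(3, 1) is AF31."""
    if af_class not in (1, 2, 3, 4):
        raise ValueError("AF class must be 1-4")
    if drop_precedence not in (1, 2, 3):
        raise ValueError("drop precedence must be 1-3")
    return 8 * af_class + 2 * drop_precedence


# A few well-known code points.
PHB_DSCP = {
    "default": 0,            # best-effort forwarding
    "EF": 46,                # Expedited Forwarding, e.g. voice
    "AF41": af_dscp(4, 1),   # 34
    "AF31": af_dscp(3, 1),   # 26
    "AF11": af_dscp(1, 1),   # 10
}

print(PHB_DSCP)
```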

Several tools are used to implement QoS, including the following:

1. Congestion Management:

Queuing accommodates temporary congestion on an interface of a network device by storing excess packets until there is enough bandwidth to forward them. When the queue is full, some packets are dropped. Congestion management lets the administrator control congestion by determining how traffic is queued and what happens when the queue is full. It can be implemented in several ways:

a. Priority Queuing (PQ): This allows the administrator to give priority to certain traffic while allowing other traffic to be dropped when the queues are full.

b. Custom Queuing (CQ): This allows the administrator to reserve queue space in the router or switch buffer for all traffic types.

c. Weighted Fair Queuing (WFQ): This allows bandwidth to be shared, with prioritization given to some traffic.

d. Class-Based Weighted Fair Queuing (CBWFQ): This extends the functionality of WFQ to provide support for user-defined classes.

e. Low Latency Queuing (LLQ): This is a combination of CBWFQ and PQ. It gives traffic that requires low delay the bandwidth it needs while still giving data traffic the bandwidth it needs. It solves the starvation problem associated with PQ.
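
The idea behind LLQ can be sketched in a few lines. This is a deliberately simplified model, not how any particular switch implements it: it counts packets instead of bytes, works in fixed rounds, and all names (`llq_schedule`, `priority_limit`, `weights`) are hypothetical. Voice is served first in every round (the PQ part) but only up to a limit, and the other classes then share the rest of the round according to their weights (the CBWFQ part), so they are never starved.

```python
from collections import deque


def llq_schedule(voice, classes, weights, priority_limit, rounds):
    """Return the order in which packets are sent.

    voice          -- list of voice packets (strict-priority queue)
    classes        -- dict: class name -> list of packets
    weights        -- dict: class name -> packets served per round
    priority_limit -- max voice packets per round (prevents starvation)
    rounds         -- number of scheduling rounds to simulate
    """
    voice_q = deque(voice)
    class_qs = {name: deque(pkts) for name, pkts in classes.items()}
    sent = []
    for _ in range(rounds):
        # Priority part: voice goes first, but only up to the limit.
        for _ in range(priority_limit):
            if not voice_q:
                break
            sent.append(voice_q.popleft())
        # Weighted part: every other class gets its share of the round.
        for name, queue in class_qs.items():
            for _ in range(weights[name]):
                if not queue:
                    break
                sent.append(queue.popleft())
    return sent


order = llq_schedule(
    voice=["v1", "v2", "v3"],
    classes={"data": ["d1", "d2", "d3"]},
    weights={"data": 1},
    priority_limit=2,
    rounds=2,
)
print(order)  # ['v1', 'v2', 'd1', 'v3', 'd2']
```

With plain PQ, `d1` would not be sent until all three voice packets had left; the per-round limit is what lets data through.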

2. Traffic Shaping and Traffic Policing:

Traffic shaping and policing are mechanisms used to control the rate of traffic. The main difference between them lies in how they handle excess traffic. Traffic policing drops or re-marks excess traffic in order to keep the flow within a specific rate limit, without introducing any delay. Traffic shaping holds excess traffic in a queue and schedules it for later transmission over increments of time.
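
The difference can be made concrete with a token bucket, a common way to model both mechanisms. The sketch below is an illustration under assumptions, not a vendor implementation: rates are in bytes per second, every packet is assumed to be no larger than the burst size, the policer only drops (it does not re-mark), and the function names are hypothetical.

```python
def police(arrivals, rate, burst):
    """Policer: forward a packet if enough tokens are available, else drop it.

    arrivals -- list of (time_s, size_bytes), sorted by time
    rate     -- token refill rate in bytes per second
    burst    -- bucket depth in bytes
    """
    tokens, last, verdicts = burst, 0.0, []
    for t, size in arrivals:
        tokens = min(burst, tokens + (t - last) * rate)  # refill since last packet
        last = t
        if size <= tokens:
            tokens -= size
            verdicts.append("forward")
        else:
            verdicts.append("drop")  # excess traffic is discarded, never delayed
    return verdicts


def shape(arrivals, rate, burst):
    """Shaper: delay a packet until enough tokens exist; return departure times."""
    tokens, last, departures = burst, 0.0, []
    for t, size in arrivals:
        start = max(t, last)  # a packet cannot leave before the one ahead of it
        tokens = min(burst, tokens + (start - last) * rate)
        if size > tokens:
            start += (size - tokens) / rate  # wait for the missing tokens
            tokens = size
        tokens -= size
        last = start
        departures.append(start)
    return departures


# Three 1000-byte packets arrive at once on a 1000 bytes/s contract.
burst_of_three = [(0.0, 1000), (0.0, 1000), (0.0, 1000)]
print(police(burst_of_three, rate=1000, burst=1000))  # ['forward', 'drop', 'drop']
print(shape(burst_of_three, rate=1000, burst=1000))   # [0.0, 1.0, 2.0]
```

The policer keeps delay at zero at the cost of loss; the shaper loses nothing but adds up to two seconds of delay, which is why shaping is a poor fit for the voice class.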

Since the network administrator divided the traffic in this particular network into two classes, Voice and Others, LLQ and traffic policing are used to implement the configurations on the switches. How, then, is QoS implemented in a network? It starts with preparation.

Preparing to Implement the QoS model:

a. Identifying the types of traffic and their requirements. (This means identifying the traffic of interest, the range of ports to be configured, and the business importance of each type of traffic in the network.)

b. Dividing the traffic into classes. (The identified traffic is divided into two classes: Voice and Others. The Voice class is given low latency, while Others is configured with guaranteed delivery.)

c. Defining QoS policies for each class. (Defining the QoS policy involves one or more of the following activities: setting a minimum bandwidth guarantee, setting a maximum bandwidth limit, assigning a priority to each class and using QoS technology to manage congestion.)

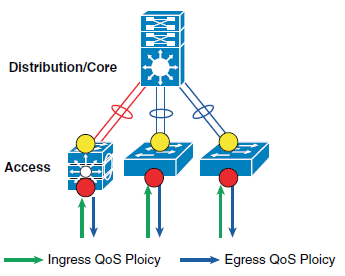

QoS needs to be designed and implemented with the entire network in mind. This includes defining trust points and determining which policies to enforce at each device within the network. Developing the trust model guides the policy implementation for each device.

While the design principles are common, QoS implementation varies between fixed-configuration switches and modular switching platforms.

This section discusses the internal switching architecture and the differentiated QoS structure on a per-hop-basis.

The devices (routers, switches) within the internal network are managed by the system administrator and are therefore classified as trusted devices. Access-layer switches communicate both with devices beyond the network boundary and with devices within the internal network domain. The QoS trust boundary at the access layer faces devices that may be deployed in different trust models (trusted, conditionally trusted, or untrusted). This section discusses the QoS policies for traffic that traverses the access-switch QoS trust boundary. The QoS function is unidirectional, which provides the flexibility to set different QoS policies for traffic entering the network and for traffic exiting it.

QoS Trust Boundary

The access-switch provides the entry point to the network for end devices. The access-switch must decide whether to accept the QoS markings from each endpoint, or whether to change them. This is determined by the QoS policies, and the trust model with which the endpoint is deployed.

End devices are classified into one of three trust models, each with its own security and QoS policies for accessing the network:

• Untrusted: An unmanaged device that does not pass through the network security policies, for example a student-owned PC or a network printer. Packets with 802.1p or DSCP markings set by untrusted endpoints are reset to the default by the access-layer switch at the edge. Otherwise, an unsecured user could consume network bandwidth and affect network availability and security for other users.

• Trusted: Devices that pass through the network access security policies and are managed by the network administrator, for example a secure PC or IP endpoints (e.g., servers, cameras, DMP, wireless access points, VoIP/video conferencing gateways). Even when these devices are maintained and secured by the network administrator, QoS policies must still be enforced to classify traffic and assign it to the appropriate queue, in order to provide bandwidth assurance and proper treatment during network congestion.

• Conditionally-Trusted: A single physical connection with one trusted endpoint and an indirect untrusted endpoint must be deployed using the conditionally-trusted model. The trusted endpoint is still managed by the network administrator, but the untrusted user behind it may or may not be secure. An example is a Cisco Unified IP Phone with a PC attached. These deployment scenarios require a hybrid QoS policy that intelligently distinguishes between the trusted and untrusted endpoints connected to the same port and applies a different QoS policy to each.
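
The decision an access switch makes at the trust boundary can be sketched as a small function. This is a simplification under assumptions: it only decides whether to keep or reset the received DSCP, the flag `from_trusted_endpoint` stands in for whatever mechanism the switch uses to tell the phone from the PC behind it, and all names are hypothetical.

```python
DEFAULT_DSCP = 0  # best effort


def ingress_dscp(trust_model, received_dscp, from_trusted_endpoint=False):
    """Return the DSCP a packet carries after crossing the trust boundary."""
    if trust_model == "trusted":
        return received_dscp  # markings are accepted as received
    if trust_model == "conditionally-trusted":
        # Keep markings from the trusted endpoint (e.g. the IP phone),
        # reset markings from the untrusted device behind it (e.g. the PC).
        return received_dscp if from_trusted_endpoint else DEFAULT_DSCP
    if trust_model == "untrusted":
        return DEFAULT_DSCP  # markings are reset to the default
    raise ValueError(f"unknown trust model: {trust_model}")


print(ingress_dscp("untrusted", 46))                                         # 0
print(ingress_dscp("conditionally-trusted", 46, from_trusted_endpoint=True)) # 46
print(ingress_dscp("conditionally-trusted", 46))                             # 0
```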

Deploying Ingress QoS

The ingress QoS policy at the access-switches needs to be established, since this is the trust boundary, where traffic enters the network. The following ingress QoS techniques are applied to provide appropriate service treatment and prevent network congestion:

• Trust: After the endpoint has been classified, the trust settings must be explicitly set by a network administrator. By default, Catalyst switches set each port to untrusted mode when QoS is enabled.

• Classification: The IETF has defined a set of application classes and provides recommended DSCP settings. This classification determines the priority the traffic will receive in the network. Using the IETF standard simplifies the classification process and improves application and network performance.

• Policing: To prevent network congestion, the access-layer switch limits the amount of inbound traffic to its maximum setting. Additional policing can be applied to known applications, to ensure that the bandwidth of an egress queue is not completely consumed by one application.

• Marking: Based on the trust model, classification and policer settings, the QoS marking is set at the edge before approved traffic enters the access-layer switching fabric. Marking traffic with the appropriate DSCP value is important to ensure that traffic is mapped to the appropriate internal queue and treated with the appropriate priority.

• Queueing: To provide differentiated services internally in the Catalyst switching fabric, all approved traffic is queued into a priority or non-priority ingress queue. The ingress queueing architecture ensures that real-time applications, such as VoIP, are given appropriate priority (e.g., transmitted before data traffic).

Deploying Egress QoS

The QoS implementation for egress traffic toward the network edge on access-layer switches is much simpler than the ingress traffic QoS. The egress QoS implementation provides optimal queueing policies for each class and sets the drop thresholds to prevent network congestion and application performance impact. Cisco Catalyst switches support four hardware queues which are assigned the following policies:

• Real-time queue (to support an RFC 3246 EF PHB service)

• Guaranteed bandwidth queue (to support RFC 2597 AF PHB services)

• Default queue (to support an RFC 2474 DF service)

• Bandwidth constrained queue (to support an RFC 3662 scavenger service)

As a best practice, the bandwidth of each physical or logical link should be divided among the hardware queues as follows:

• Real-time queue should not exceed 33% of the link's bandwidth.

• Default queue should be at least 25% of the link's bandwidth.

• Bulk/scavenger queue should not exceed 5% of the link's bandwidth.
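
A planned allocation can be checked against these three rules before it is configured. In the sketch below, the queue names and the requirement that the shares add up to 100% are assumptions made for the example; the limits themselves are the ones listed above.

```python
def allocation_problems(shares):
    """Check per-queue bandwidth shares (in percent) against the guidelines."""
    problems = []
    if sum(shares.values()) != 100:
        problems.append("shares must add up to 100%")
    if shares.get("realtime", 0) > 33:
        problems.append("real-time queue exceeds 33%")
    if shares.get("default", 0) < 25:
        problems.append("default queue is below 25%")
    if shares.get("scavenger", 0) > 5:
        problems.append("bulk/scavenger queue exceeds 5%")
    return problems


# Two hypothetical plans for the four hardware queues.
good = {"realtime": 30, "guaranteed": 40, "default": 25, "scavenger": 5}
bad = {"realtime": 50, "guaranteed": 30, "default": 15, "scavenger": 5}

print(allocation_problems(good))  # []
print(allocation_problems(bad))
# ['real-time queue exceeds 33%', 'default queue is below 25%']
```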

Given these minimum queuing requirements and bandwidth allocation recommendations, the following application classes can be mapped to the respective queues:

• Realtime Queue: Voice, broadcast video, and realtime interactive may be mapped to the realtime queue (per RFC 4594).

• Guaranteed Queue: Network/internetwork control, signaling, network management, multimedia conferencing, multimedia streaming, and transactional data can be mapped to the guaranteed bandwidth queue. Congestion avoidance mechanisms (i.e., selective dropping tools), such as WRED, can be enabled on this class. If configurable drop thresholds are supported on the platform, these may be enabled to provide intra-queue QoS to these application classes, in the respective order they are listed (such that control plane protocols receive the highest level of QoS within a given queue).

• Scavenger/Bulk Queue: Bulk data and scavenger traffic can be mapped to the bandwidth-constrained queue, and congestion avoidance mechanisms can be enabled on this class. If configurable drop thresholds are supported on the platform, these may be enabled to provide intra-queue QoS, so that scavenger traffic is dropped ahead of bulk data.

• Default Queue: Best effort traffic can be mapped to the default queue; congestion avoidance mechanisms can be enabled on this class.
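
The mapping described above amounts to a lookup table. The sketch below writes it out; the class and queue labels are taken from the list, while the function name and the choice to send any unlisted class to the default queue are assumptions.

```python
# Application class -> egress queue, as listed above.
QUEUE_FOR_CLASS = {
    "voice": "realtime",
    "broadcast video": "realtime",
    "realtime interactive": "realtime",
    "network control": "guaranteed",
    "signaling": "guaranteed",
    "network management": "guaranteed",
    "multimedia conferencing": "guaranteed",
    "multimedia streaming": "guaranteed",
    "transactional data": "guaranteed",
    "bulk data": "scavenger/bulk",
    "scavenger": "scavenger/bulk",
    "best effort": "default",
}


def queue_for(app_class: str) -> str:
    """Return the egress queue for an application class (default if unlisted)."""
    return QUEUE_FOR_CLASS.get(app_class.lower(), "default")


print(queue_for("Voice"))      # realtime
print(queue_for("Signaling"))  # guaranteed
print(queue_for("Bulk data"))  # scavenger/bulk
```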

Deploying Network Core QoS

All connections between internal network devices that are deployed within the network domain boundary are classified as trusted and follow the same QoS best practices recommended in the previous section. Ingress and egress core QoS policies are simpler than those applied at the network edge.

The core network devices are considered trusted and rely on the access-switch to properly mark DSCP values. The core network is deployed to ensure consistent differentiated QoS service across the network. This ensures there is no service quality degradation for high-priority traffic, such as IP telephony or video.
